1 Preface

The previous article on Spring Cloud Data Flow ran all of its examples through the UI. Linux servers, however, are generally operated from the command line, and a Jenkins integration cannot use the UI either. Fortunately, the official Data Flow Shell tool lets us do the same work from the command line, which is very convenient.

The Spring Cloud Data Flow Server exposes its operations through a REST API, and the Shell is essentially a client that calls this API.
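Because the Shell is only a REST client, the same data can be fetched with any HTTP client. The sketch below is a minimal illustration, not the way the Shell itself is implemented. It assumes that the server publishes its registered applications at /apps, as described in the Data Flow REST API guide; list_apps and DATAFLOW_URI are made-up names.

```shell
# Assumption: the server exposes its registered applications at /apps,
# as described in the Data Flow REST API guide.
# DATAFLOW_URI is a made-up variable name; when it is unset, the default
# address of a local server is used.
list_apps() {
  curl -s "${DATAFLOW_URI:-http://localhost:9393}/apps"
}
```

Calling list_apps against a running server should return a JSON document describing roughly the same applications that the Shell shows with app list.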

2 Common operations

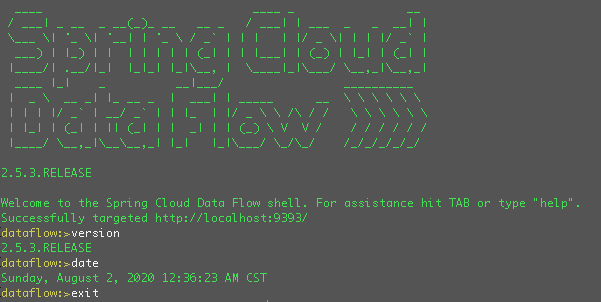

2.1 Startup

First make sure a Java runtime is installed, then download the executable jar, spring-cloud-dataflow-shell-2.5.3.RELEASE.jar.

Then start the shell:

$ java -jar spring-cloud-dataflow-shell-2.5.3.RELEASE.jar

By default the shell connects to the Server at http://localhost:9393. Use --dataflow.uri=[address] to target a different Server. If the Server requires authentication, add --dataflow.username=[user] --dataflow.password=[password].

For example, to connect to the Server that was installed on Kubernetes earlier:

$ java -jar spring-cloud-dataflow-shell-2.5.3.RELEASE.jar --dataflow.uri=http://localhost:30093

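In a pipeline it is handy to keep these startup options in one place. The following is a minimal sketch of such a wrapper, not an official tool. The function name dataflow_shell and the variables DATAFLOW_URI, DATAFLOW_USER, and DATAFLOW_PASSWORD are made-up names; only the --dataflow.* options come from the shell itself.

```shell
# Hypothetical wrapper around the Data Flow Shell jar.
# DATAFLOW_URI, DATAFLOW_USER and DATAFLOW_PASSWORD are made-up variable names.
# Extra arguments are passed through to the shell unchanged.
dataflow_shell() (
  uri=${DATAFLOW_URI:-http://localhost:9393}
  # Add the credentials only when a user name is configured.
  if [ -n "${DATAFLOW_USER:-}" ]; then
    set -- "--dataflow.username=$DATAFLOW_USER" \
           "--dataflow.password=${DATAFLOW_PASSWORD:-}" "$@"
  fi
  java -jar spring-cloud-dataflow-shell-2.5.3.RELEASE.jar "--dataflow.uri=$uri" "$@"
)
```

With DATAFLOW_URI=http://localhost:30093 set, calling dataflow_shell runs the same command as the example above. Keep in mind that a password passed on the command line is visible in the process list.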

2.2 Application operations

This section covers the application-related commands.

List all currently registered apps:

dataflow:>app list

╔═══╤══════╤═════════╤════╤════════════════════╗

║app│source│processor│sink│ task ║

╠═══╪══════╪═════════╪════╪════════════════════╣

║ │ │ │ │composed-task-runner║

║ │ │ │ │timestamp-batch ║

║ │ │ │ │timestamp ║

╚═══╧══════╧═════════╧════╧════════════════════╝

View information about a single app:

dataflow:>app info --type task timestamp

Information about task application 'timestamp':

Version: '2.1.1.RELEASE':

Default application version: 'true':

Resource URI: docker:springcloudtask/timestamp-task:2.1.1.RELEASE

╔══════════════════════════════╤══════════════════════════════╤══════════════════════════════╤══════════════════════════════╗

║ Option Name │ Description │ Default │ Type ║

╠══════════════════════════════╪══════════════════════════════╪══════════════════════════════╪══════════════════════════════╣

║timestamp.format │The timestamp format, │yyyy-MM-dd HH:mm:ss.SSS │java.lang.String ║

║ │"yyyy-MM-dd HH:mm:ss.SSS" by │ │ ║

║ │default. │ │ ║

╚══════════════════════════════╧══════════════════════════════╧══════════════════════════════╧══════════════════════════════╝

Unregister an app:

dataflow:>app unregister --type task timestamp

Successfully unregistered application 'timestamp' with type 'task'.

dataflow:>app list

╔═══╤══════╤═════════╤════╤════════════════════╗

║app│source│processor│sink│ task ║

╠═══╪══════╪═════════╪════╪════════════════════╣

║ │ │ │ │composed-task-runner║

║ │ │ │ │timestamp-batch ║

╚═══╧══════╧═════════╧════╧════════════════════╝

Unregister all apps:

dataflow:>app all unregister

Successfully unregistered applications.

dataflow:>app list

No registered apps.

You can register new apps with the 'app register' and 'app import' commands.

Register an app:

dataflow:>app register --name timestamp-pkslow --type task --uri docker:springcloudtask/timestamp-task:2.1.1.RELEASE

Successfully registered application 'task:timestamp-pkslow'

dataflow:>app list

╔═══╤══════╤═════════╤════╤════════════════╗

║app│source│processor│sink│ task ║

╠═══╪══════╪═════════╪════╪════════════════╣

║ │ │ │ │timestamp-pkslow║

╚═══╧══════╧═════════╧════╧════════════════╝

Import apps in bulk, either from a URL or from a properties file:

dataflow:>app import https://dataflow.spring.io/task-docker-latest

Successfully registered 3 applications from [task.composed-task-runner, task.timestamp.metadata, task.composed-task-runner.metadata, task.timestamp-batch.metadata, task.timestamp-batch, task.timestamp]

dataflow:>app list

╔═══╤══════╤═════════╤════╤════════════════════╗

║app│source│processor│sink│ task ║

╠═══╪══════╪═════════╪════╪════════════════════╣

║ │ │ │ │timestamp-pkslow ║

║ │ │ │ │composed-task-runner║

║ │ │ │ │timestamp-batch ║

║ │ │ │ │timestamp ║

╚═══╧══════╧═════════╧════╧════════════════════╝

Note that when you register or import an app that is already registered, the existing registration is not overwritten by default. If you want to overwrite it, add the --force parameter:

dataflow:>app register --name timestamp-pkslow --type task --uri docker:springcloudtask/timestamp-task:2.1.1.RELEASE

Command failed org.springframework.cloud.dataflow.rest.client.DataFlowClientException: The 'task:timestamp-pkslow' application is already registered as docker:springcloudtask/timestamp-task:2.1.1.RELEASE

The 'task:timestamp-pkslow' application is already registered as docker:springcloudtask/timestamp-task:2.1.1.RELEASE

dataflow:>app register --name timestamp-pkslow --type task --uri docker:springcloudtask/timestamp-task:2.1.1.RELEASE --force

Successfully registered application 'task:timestamp-pkslow'


2.3 Task operations

List the tasks:

dataflow:>task list

╔════════════════╤════════════════════════════════╤═══════════╤═══════════╗

║ Task Name │ Task Definition │description│Task Status║

╠════════════════╪════════════════════════════════╪═══════════╪═══════════╣

║timestamp-pkslow│timestamp │ │COMPLETE ║

║timestamp-two │<t1: timestamp || t2: timestamp>│ │ERROR ║

║timestamp-two-t1│timestamp │ │COMPLETE ║

║timestamp-two-t2│timestamp │ │COMPLETE ║

╚════════════════╧════════════════════════════════╧═══════════╧═══════════╝

Delete a task. The task deleted here is a composed task, so its child tasks are deleted as well:

dataflow:>task destroy timestamp-two

Destroyed task 'timestamp-two'

dataflow:>task list

╔════════════════╤═══════════════╤═══════════╤═══════════╗

║ Task Name │Task Definition│description│Task Status║

╠════════════════╪═══════════════╪═══════════╪═══════════╣

║timestamp-pkslow│timestamp │ │COMPLETE ║

╚════════════════╧═══════════════╧═══════════╧═══════════╝

Delete all tasks. Because this is risky, the shell asks for confirmation first:

dataflow:>task all destroy

Really destroy all tasks? [y, n]: y

All tasks destroyed

dataflow:>task list

╔═════════╤═══════════════╤═══════════╤═══════════╗

║Task Name│Task Definition│description│Task Status║

╚═════════╧═══════════════╧═══════════╧═══════════╝

Create a task:

dataflow:>task create timestamp-pkslow-t1 --definition "timestamp --format=\"yyyy\"" --description "pkslow timestamp task"

Created new task 'timestamp-pkslow-t1'

dataflow:>task list

╔═══════════════════╤═══════════════════════╤═════════════════════╤═══════════╗

║ Task Name │ Task Definition │ description │Task Status║

╠═══════════════════╪═══════════════════════╪═════════════════════╪═══════════╣

║timestamp-pkslow-t1│timestamp --format=yyyy│pkslow timestamp task│UNKNOWN ║

╚═══════════════════╧═══════════════════════╧═════════════════════╧═══════════╝

To launch a task and check its status, note the execution ID printed at launch, then query the status with that ID:

dataflow:>task launch timestamp-pkslow-t1

Launched task 'timestamp-pkslow-t1' with execution id 8

dataflow:>task execution status 8

╔═══════════════╤═══════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════╗

║ Key │ Value ║

╠═══════════════╪═══════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════╣

║Id │8 ║

║Resource URL │Docker Resource [docker:springcloudtask/timestamp-task:2.1.1.RELEASE] ║

║Name │timestamp-pkslow-t1 ║

║CLI Arguments │[--timestamp.format=yyyy, --spring.datasource.username=******, --spring.datasource.url=******, --spring.datasource.driverClassName=org.mariadb.jdbc.Driver, ║

║ │--spring.cloud.task.name=timestamp-pkslow-t1, --spring.datasource.password=******, --spring.cloud.data.flow.platformname=default, --spring.cloud.task.executionid=8] ║

║App Arguments │ timestamp.format = yyyy ║

║ │ spring.datasource.username = ****** ║

║ │ spring.datasource.url = ****** ║

║ │spring.datasource.driverClassName = org.mariadb.jdbc.Driver ║

║ │ spring.cloud.task.name = timestamp-pkslow-t1 ║

║ │ spring.datasource.password = ****** ║

║Deployment │ ║

║Properties │ ║

║Job Execution │[] ║

║Ids │ ║

║Start Time │Sun Aug 02 01:20:52 CST 2020 ║

║End Time │Sun Aug 02 01:20:52 CST 2020 ║

║Exit Code │0 ║

║Exit Message │ ║

║Error Message │ ║

║External │timestamp-pkslow-t1-r27jvm6591 ║

║Execution Id │ ║

╚═══════════════╧═══════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════╝

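In a pipeline the execution ID has to be picked out of the launch message by a program rather than by eye. The following is a minimal sketch that relies only on the message formats shown above: the "Launched task ... with execution id N" line and the "Exit Code" row of the status table. The helper names execution_id and exit_code are made up and are not part of the Data Flow Shell.

```shell
# Hypothetical helpers for scripting around Data Flow Shell output.
# Both read shell output on stdin and print nothing when there is no match.

# Print the execution id from a "task launch" message.
execution_id() {
  sed -n "s/.*Launched task '.*' with execution id \([0-9][0-9]*\).*/\1/p"
}

# Print the exit code from the "Exit Code" row of "task execution status".
exit_code() {
  sed -n 's/.*Exit Code[^0-9]*\([0-9][0-9]*\).*/\1/p'
}
```

Assuming the shell prints these messages to standard output, its output can be piped straight into the helpers. Parsing human-readable output is brittle, so treat this as a stopgap and recheck it whenever the shell version changes.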

List all task executions and view the log of one execution:

dataflow:>task execution list

╔═══════════════════╤══╤════════════════════════════╤════════════════════════════╤═════════╗

║ Task Name │ID│ Start Time │ End Time │Exit Code║

╠═══════════════════╪══╪════════════════════════════╪════════════════════════════╪═════════╣

║timestamp-pkslow-t1│8 │Sun Aug 02 01:20:52 CST 2020│Sun Aug 02 01:20:52 CST 2020│0 ║

║timestamp-two-t2 │7 │Sat Aug 01 00:46:34 CST 2020│Sat Aug 01 00:46:35 CST 2020│0 ║

║timestamp-two-t1 │6 │Sat Aug 01 00:46:28 CST 2020│Sat Aug 01 00:46:28 CST 2020│0 ║

║timestamp-two │5 │Sat Aug 01 00:46:22 CST 2020│Sat Aug 01 00:46:23 CST 2020│1 ║

║timestamp-two │4 │Sat Aug 01 00:45:45 CST 2020│Sat Aug 01 00:46:37 CST 2020│0 ║

║timestamp-pkslow │3 │Wed Jul 29 16:57:19 CST 2020│Wed Jul 29 16:57:19 CST 2020│0 ║

║timestamp │2 │Wed Jul 29 15:41:40 CST 2020│Wed Jul 29 15:41:40 CST 2020│0 ║

║timestamp │1 │ │ │ ║

╚═══════════════════╧══╧════════════════════════════╧════════════════════════════╧═════════╝

dataflow:>task execution log 8

2020-08-01 17:20:48.967 INFO 1 --- [ main] trationDelegate$BeanPostProcessorChecker : Bean 'org.springframework.cloud.autoconfigure.ConfigurationPropertiesRebinderAutoConfiguration' of type [org.springframework.cloud.autoconfigure.ConfigurationPropertiesRebinderAutoConfiguration$$EnhancerBySpringCGLIB$$ccb7e6d0] is not eligible for getting processed by all BeanPostProcessors (for example: not eligible for auto-proxying)

. ____ _ __ _ _

/\\ / ___'_ __ _ _(_)_ __ __ _ \ \ \ \

( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \

\\/ ___)| |_)| | | | | || (_| | ) ) ) )

' |____| .__|_| |_|_| |_\__, | / / / /

=========|_|==============|___/=/_/_/_/

:: Spring Boot :: (v2.1.13.RELEASE)

2020-08-01 17:20:49.200 INFO 1 --- [ main] c.c.c.ConfigServicePropertySourceLocator : Fetching config from server at : http://localhost:8888

2020-08-01 17:20:49.411 INFO 1 --- [ main] c.c.c.ConfigServicePropertySourceLocator : Connect Timeout Exception on Url - http://localhost:8888. Will be trying the next url if available

2020-08-01 17:20:49.411 WARN 1 --- [ main] c.c.c.ConfigServicePropertySourceLocator : Could not locate PropertySource: I/O error on GET request for "http://localhost:8888/timestamp-task/default": Connection refused (Connection refused); nested exception is java.net.ConnectException: Connection refused (Connection refused)

2020-08-01 17:20:49.415 INFO 1 --- [ main] o.s.c.t.a.t.TimestampTaskApplication : No active profile set, falling back to default profiles: default

2020-08-01 17:20:50.059 INFO 1 --- [ main] o.s.cloud.context.scope.GenericScope : BeanFactory id=206f72b7-a955-301c-9338-4db66c6fe95b

2020-08-01 17:20:50.130 INFO 1 --- [ main] trationDelegate$BeanPostProcessorChecker : Bean 'org.springframework.cloud.autoconfigure.ConfigurationPropertiesRebinderAutoConfiguration' of type [org.springframework.cloud.autoconfigure.ConfigurationPropertiesRebinderAutoConfiguration$$EnhancerBySpringCGLIB$$ccb7e6d0] is not eligible for getting processed by all BeanPostProcessors (for example: not eligible for auto-proxying)

2020-08-01 17:20:50.479 INFO 1 --- [ main] com.zaxxer.hikari.HikariDataSource : HikariPool-1 - Starting...

2020-08-01 17:20:50.663 INFO 1 --- [ main] com.zaxxer.hikari.HikariDataSource : HikariPool-1 - Start completed.

2020-08-01 17:20:51.602 INFO 1 --- [ main] o.s.c.t.a.t.TimestampTaskApplication : Started TimestampTaskApplication in 3.98 seconds (JVM running for 4.782)

2020-08-01 17:20:51.604 INFO 1 --- [ main] TimestampTaskConfiguration$TimestampTask : 2020

2020-08-01 17:20:51.626 INFO 1 --- [ Thread-5] com.zaxxer.hikari.HikariDataSource : HikariPool-1 - Shutdown initiated...

2020-08-01 17:20:51.633 INFO 1 --- [ Thread-5] com.zaxxer.hikari.HikariDataSource : HikariPool-1 - Shutdown completed.

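The execution list above contains one run with exit code 1 (ID 5). A script can find such rows automatically. The sketch below assumes the table layout shown above, with five columns separated by the "│" character; failed_executions is a made-up helper name.

```shell
# Hypothetical helper: read "task execution list" output on stdin and print
# the ID of every execution whose exit code is present and not 0.
failed_executions() {
  awk -F'│' '
    NF == 5 {
      id = $2; code = $5
      gsub(/[^0-9]/, "", id)      # keep digits only; the header row becomes empty
      gsub(/[^0-9]/, "", code)    # drops padding and the closing border character
      if (id != "" && code != "" && code != "0") print id
    }'
}
```

Rows without an exit code, such as execution 1 above, are skipped rather than reported as failures. That is a design choice of this sketch; a stricter check could treat them as unfinished runs.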

2.4 HTTP requests

The shell can also send HTTP requests:

dataflow:>http get https://www.pkslow.com

dataflow:>http post --target https://www.pkslow.com --data "data"

> POST (text/plain) https://www.pkslow.com data

> 405 METHOD_NOT_ALLOWED

Error sending data 'data' to 'https://www.pkslow.com'


2.5 Read and execute the file

First prepare a script file containing Data Flow Shell commands. Here the file is named pkslow.shell and has the following contents:

version

date

app list

Run it with the script command. The output is as follows:

dataflow:>script pkslow.shell

version

2.5.3.RELEASE

date

Sunday, August 2, 2020 1:59:34 AM CST

app list

╔═══╤══════╤═════════╤════╤════════════════════╗

║app│source│processor│sink│ task ║

╠═══╪══════╪═════════╪════╪════════════════════╣

║ │ │ │ │timestamp-pkslow ║

║ │ │ │ │composed-task-runner║

║ │ │ │ │timestamp-batch ║

║ │ │ │ │timestamp ║

╚═══╧══════╧═════════╧════╧════════════════════╝

Script required 0.045 seconds to execute

dataflow:>

In a CI/CD pipeline, however, we do not want to start an interactive shell and then run the script by hand. We want a single step that runs the commands and exits the shell when it is done. This is possible by passing the file at startup with --spring.shell.commandFile; multiple files are separated by commas. For example:

$ java -jar spring-cloud-dataflow-shell-2.5.3.RELEASE.jar --dataflow.uri=http://localhost:30093 --spring.shell.commandFile=pkslow.shell

Successfully targeted http://localhost:30093

2020-08-02T02:03:49+0800 INFO main o.s.c.d.s.DataflowJLineShellComponent:311 - 2.5.3.RELEASE

2020-08-02T02:03:49+0800 INFO main o.s.c.d.s.DataflowJLineShellComponent:311 - Sunday, August 2, 2020 2:03:49 AM CST

2020-08-02T02:03:49+0800 INFO main o.s.c.d.s.DataflowJLineShellComponent:309 -

╔═══╤══════╤═════════╤════╤════════════════════╗

║app│source│processor│sink│ task ║

╠═══╪══════╪═════════╪════╪════════════════════╣

║ │ │ │ │timestamp-pkslow ║

║ │ │ │ │composed-task-runner║

║ │ │ │ │timestamp-batch ║

║ │ │ │ │timestamp ║

╚═══╧══════╧═════════╧════╧════════════════════╝

$


After the commands finish, the shell exits and control returns to the Linux terminal instead of staying at the shell prompt. This is exactly what we need.
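When a pipeline runs several script files, the comma-separated value can be built from a list of file names. A minimal sketch follows; command_file_option is a made-up helper name, and it assumes that no file name contains a comma.

```shell
# Hypothetical helper: build the --spring.shell.commandFile option from one or
# more script files, joining their names with commas.
command_file_option() (
  IFS=,          # "$*" joins the arguments with the first character of IFS
  printf '%s%s\n' '--spring.shell.commandFile=' "$*"
)
```

For example, command_file_option register.shell launch.shell (two made-up file names) prints --spring.shell.commandFile=register.shell,launch.shell, which can be appended to the java -jar command shown above.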

Next, prepare a script that registers an application, creates a task, and launches it:

version

date

app register --name pkslow-app-1 --type task --uri docker:springcloudtask/timestamp-task:2.1.1.RELEASE

task create pkslow-task-1 --definition "pkslow-app-1"

task launch pkslow-task-1

Run it the same way. The output is as follows:

$ java -jar spring-cloud-dataflow-shell-2.5.3.RELEASE.jar --dataflow.uri=http://localhost:30093 --spring.shell.commandFile=pkslow.shell

Successfully targeted http://localhost:30093

2020-08-02T02:06:41+0800 INFO main o.s.c.d.s.DataflowJLineShellComponent:311 - 2.5.3.RELEASE

2020-08-02T02:06:41+0800 INFO main o.s.c.d.s.DataflowJLineShellComponent:311 - Sunday, August 2, 2020 2:06:41 AM CST

2020-08-02T02:06:41+0800 INFO main o.s.c.d.s.DataflowJLineShellComponent:311 - Successfully registered application 'task:pkslow-app-1'

2020-08-02T02:06:42+0800 INFO main o.s.c.d.s.DataflowJLineShellComponent:311 - Created new task 'pkslow-task-1'

2020-08-02T02:06:51+0800 INFO main o.s.c.d.s.DataflowJLineShellComponent:311 - Launched task 'pkslow-task-1' with execution id 9


In this way, packaging, deployment, and execution can all be automated.
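A pipeline usually needs the same three commands for different applications, so the script file can be generated instead of written by hand. The following is a minimal sketch; write_task_script is a made-up helper name. It adds --force, described earlier, so that a repeated run does not fail at the registration step. The task create step can still fail if the task definition already exists.

```shell
# Hypothetical helper: write a Data Flow Shell script that registers an app,
# creates a task from it and launches the task.
# Usage: write_task_script FILE APP_NAME TASK_NAME URI
write_task_script() (
  file=$1 app=$2 task=$3 uri=$4
  cat > "$file" <<EOF
app register --name $app --type task --uri $uri --force
task create $task --definition "$app"
task launch $task
EOF
)
```

Calling write_task_script pkslow.shell pkslow-app-1 pkslow-task-1 docker:springcloudtask/timestamp-task:2.1.1.RELEASE produces the register, create, and launch lines of the script above, with --force added to the first one.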

3 Some tips

The shell provides many commands. You do not need to memorize them, because the help command lists them all:

dataflow:>help

* ! - Allows execution of operating system (OS) commands

* // - Inline comment markers (start of line only)

* ; - Inline comment markers (start of line only)

* app all unregister - Unregister all applications

* app default - Change the default application version

* app import - Register all applications listed in a properties file

* app info - Get information about an application

* app list - List all registered applications

* app register - Register a new application

* app unregister - Unregister an application

* clear - Clears the console

* cls - Clears the console

* dataflow config info - Show the Dataflow server being used

* dataflow config server - Configure the Spring Cloud Data Flow REST server to use

* date - Displays the local date and time

* exit - Exits the shell

* help - List all commands usage

* http get - Make GET request to http endpoint

* http post - POST data to http endpoint

* job execution display - Display the details of a specific job execution

* job execution list - List created job executions filtered by jobName

* job execution restart - Restart a failed job by jobExecutionId

* job execution step display - Display the details of a specific step execution

* job execution step list - List step executions filtered by jobExecutionId

* job execution step progress - Display the details of a specific step progress

* job instance display - Display the job executions for a specific job instance.

* quit - Exits the shell

* runtime apps - List runtime apps

* script - Parses the specified resource file and executes its commands

* stream all destroy - Destroy all existing streams

* stream all undeploy - Un-deploy all previously deployed stream

* stream create - Create a new stream definition

* stream deploy - Deploy a previously created stream using Skipper

* stream destroy - Destroy an existing stream

* stream history - Get history for the stream deployed using Skipper

* stream info - Show information about a specific stream

* stream list - List created streams

* stream manifest - Get manifest for the stream deployed using Skipper

* stream platform-list - List Skipper platforms

* stream rollback - Rollback a stream using Skipper

* stream scale app instances - Scale app instances in a stream

* stream undeploy - Un-deploy a previously deployed stream

* stream update - Update a previously created stream using Skipper

* stream validate - Verify that apps contained in the stream are valid.

* system properties - Shows the shell's properties

* task all destroy - Destroy all existing tasks

* task create - Create a new task definition

* task destroy - Destroy an existing task

* task execution cleanup - Clean up any platform specific resources linked to a task execution

* task execution current - Display count of currently executin tasks and related information

* task execution list - List created task executions filtered by taskName

* task execution log - Retrieve task execution log

* task execution status - Display the details of a specific task execution

* task execution stop - Stop executing tasks

* task launch - Launch a previously created task

* task list - List created tasks

* task platform-list - List platform accounts for tasks

* task schedule create - Create new task schedule

* task schedule destroy - Delete task schedule

* task schedule list - List task schedules by task definition name

* task validate - Validate apps contained in task definitions

* version - Displays shell version

If you are only interested in one group of commands, use help followed by the command name:

dataflow:>help version

* version - Displays shell version

dataflow:>help app

* app all unregister - Unregister all applications

* app default - Change the default application version

* app import - Register all applications listed in a properties file

* app info - Get information about an application

* app list - List all registered applications

* app register - Register a new application

* app unregister - Unregister an application

The shell also supports command completion with the Tab key.

4 Summary

This article walked through many commands and included their output, which should make them easier to understand.

Reference https://www.cnblogs.com/larrydpk/p/13431123.html